🦅 The AI companion market loses teens, ⚡ Extropic’s 10,000× efficiency claim, ☢ Who will power AI after the energy crash

November 4, 2025. Inside this week:

Character.AI shuts down chats for minors - the teen AI companion bubble bursts

Extropic promises chips 10,000× more energy-efficient than GPUs

Fusion energy emerges as AI’s survival plan

Plus: OpenAI IPO, Aardvark from DeepMind, Sora 2’s first cameo, and more

🦅 Character.AI closes chats for minors - the AI companion market loses teenagers

✍️ Essentials

Character.AI announced that starting this week, chats for users under 18 will be disabled by default.

Accounts without verified age will lose access to emotional and “intimate” character categories, including roleplay companions.

The company cited increased pressure from U.S. regulators and school districts after reports that AI chat companions were replacing social interactions among teenagers.

In October, the Wall Street Journal reported that over 60% of Character.AI’s active users were between 13 and 19 years old.

Among them, nearly half had used the app as a “safe space” to discuss anxiety, relationships, or gender identity.

Context:

Last month, California senators proposed the AI Youth Protection Act, which would impose strict limits on emotionally manipulative chatbots and mandate “human fallback” systems in all education and health contexts.

Schools in Texas and Washington have already blocked Character.AI and Replika on their networks.

Meanwhile, Apple and Google are preparing new rules that would require explicit parental consent for any chatbot with “psychological influence potential”.

The company’s co-founder Noam Shazeer said that the platform’s next update will focus on “educational and creative” characters.

He added that Character.AI “was never designed to simulate relationships, but to enhance imagination”.

🐻 Bear’s Take

The teenage AI companion boom is ending under regulatory pressure.

Character.AI’s pivot to creativity is a survival move, not a choice.

This wave will define the next frontier: emotional regulation as a core design constraint.

🚨 Bear In Mind: Who’s At Risk

Companion app developers - 9/10 - Prepare for compliance audits and parental verification requirements.

Education tech startups - 7/10 - Emotional moderation will become mandatory. Build teacher oversight dashboards.

Advertisers and brands - 6/10 - Teen audiences will migrate from AI companions to “collective” platforms like Roblox and Discord.

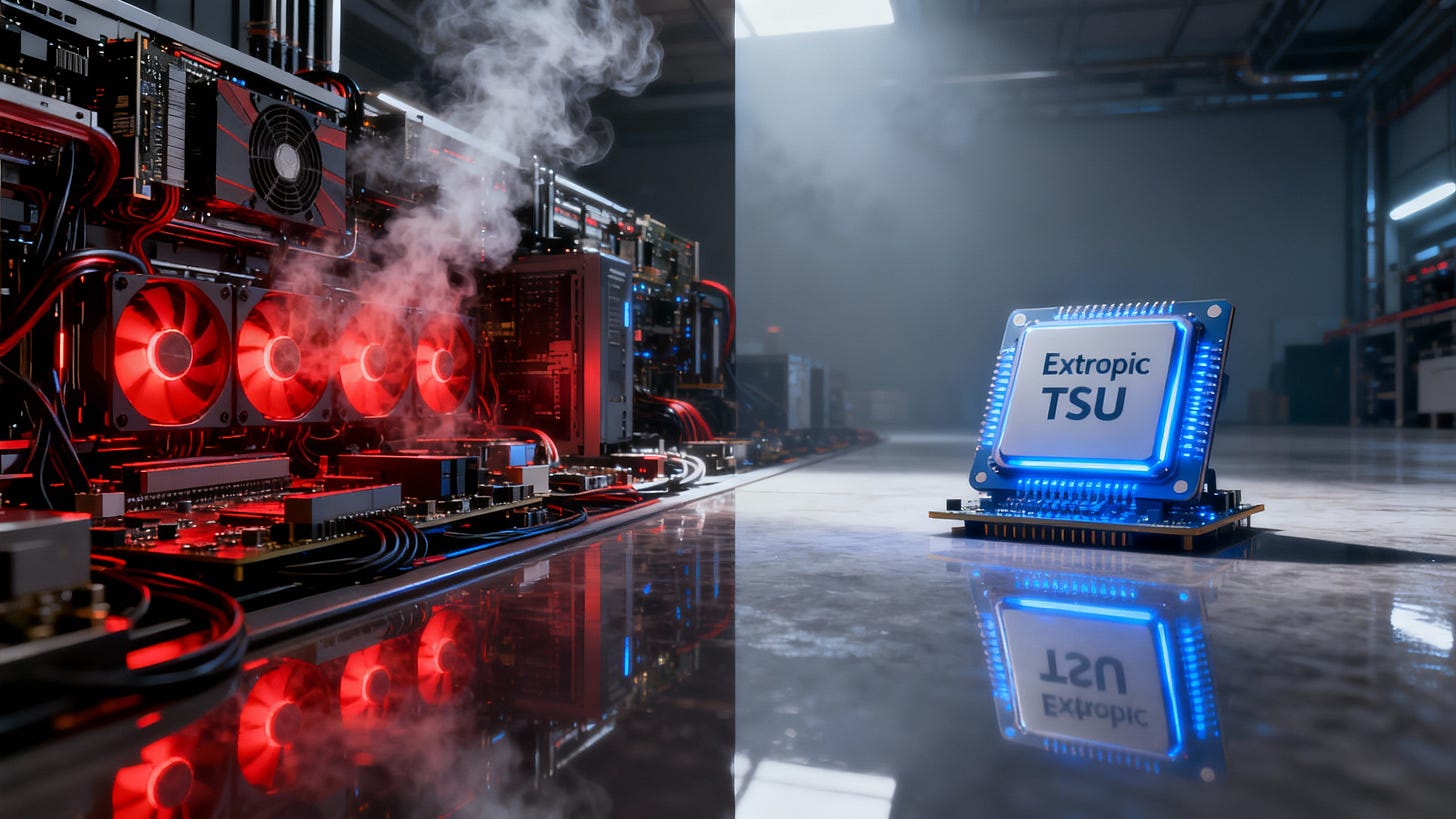

⚡ Extropic claims 10,000× more efficient AI chips

✍️ Essentials

The startup Extropic, founded by former Google researcher and quantum physicist Guillaume Verdon, has unveiled a new computing architecture that, according to the company, can make AI up to 10,000 times more energy-efficient than today’s GPUs.

Their chips are based on a concept called thermodynamic computing - a radical departure from the binary logic used in traditional processors.

Instead of processing precise 1s and 0s, Extropic’s chips calculate with probability distributions and use the natural fluctuations of physical systems as part of the computation process.

Verdon describes it as “physics-based computation aligned with entropy rather than fighting it.”

The principle comes from statistical mechanics - the same laws that govern how energy and matter behave at the atomic level.

Where modern chips require massive amounts of energy to maintain perfect logical order, Extropic’s circuits embrace randomness, allowing computation to occur “at the edge of thermal equilibrium.”

This makes it possible, in theory, to extract meaningful work from noise instead of resisting it.

How it works:

Each Extropic chip contains billions of microscopic analog components that fluctuate in response to heat, light, or voltage.

Rather than forcing the system to settle into a single deterministic state, the architecture continuously measures these fluctuations and averages the results across thousands of parallel stochastic interactions.

For neural networks, which are inherently probabilistic, this provides nearly identical results with a fraction of the energy cost.
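The averaging idea can be sketched in software. This is only a toy simulation, not Extropic's design: the function name `stochastic_estimate` and the pseudorandom generator standing in for physical noise are assumptions made for illustration. It shows how many unreliable 0/1 readings, averaged together, converge on a stable value.

```python
import random


def stochastic_estimate(p_true: float, n_samples: int, seed: int = 0) -> float:
    """Average n_samples noisy 0/1 readings, each equal to 1 with probability p_true.

    Hypothetical stand-in for a fluctuating analog element: a software
    pseudorandom generator plays the role of physical noise.
    """
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_samples) if rng.random() < p_true)
    return hits / n_samples


if __name__ == "__main__":
    # More samples give an estimate closer to the underlying probability (0.3 here).
    for n in (10, 1_000, 100_000):
        print(f"{n:>7} samples -> estimate {stochastic_estimate(0.3, n):.4f}")
```

The estimate's error shrinks roughly with the square root of the sample count, which is why such schemes lean on large numbers of cheap parallel samples rather than on a few precise ones.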

Performance claims:

In internal benchmarks (run on inference workloads similar to GPT-3 scale), Extropic reports power consumption below 0.1% of an Nvidia H100 GPU while maintaining comparable accuracy.
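It helps to convert between the two ways the claim is stated. The sketch below is plain arithmetic, with helper names (`efficiency_factor`, `power_percent`) chosen here for illustration. It treats "efficiency factor" as the inverse of the power fraction at equal work, which is an assumption about how the benchmark was framed.

```python
def efficiency_factor(power_percent_of_baseline: float) -> float:
    """Return how many times more efficient a chip is if it draws the given
    percentage of the baseline's power for the same work (assumed framing)."""
    if power_percent_of_baseline <= 0:
        raise ValueError("percentage must be positive")
    return 100.0 / power_percent_of_baseline


def power_percent(factor: float) -> float:
    """Inverse conversion: efficiency factor -> percentage of baseline power."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    return 100.0 / factor


if __name__ == "__main__":
    print(f"0.1% of baseline power -> {efficiency_factor(0.1):,.0f}x")
    print(f"10,000x efficiency     -> {power_percent(10_000):.2f}% of baseline power")
```

Under this reading, 0.1% of an H100's power corresponds to a 1,000× gain, while the headline 10,000× figure would require 0.01%. "Below 0.1%" is therefore compatible with the headline number but does not by itself demonstrate it.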

Training tasks remain experimental, but the company claims early progress on hybrid architectures that combine stochastic and digital logic.

Context:

The timing could not be better.

According to the International Energy Agency, AI data centers could consume as much as 5% of global electricity by 2026 - about the same as Japan.

GPU-based computation is reaching both physical and economic limits: the energy required to train frontier models doubles every 8 to 10 months.
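To put the doubling period in yearly terms, here is a small sketch. The function name `annual_growth` is illustrative, and the only inputs are the 8 and 10 month figures quoted above.

```python
def annual_growth(doubling_months: float) -> float:
    """Yearly growth multiple implied by a given doubling period in months."""
    if doubling_months <= 0:
        raise ValueError("doubling period must be positive")
    return 2.0 ** (12.0 / doubling_months)


if __name__ == "__main__":
    for months in (8, 10):
        print(f"doubling every {months} months -> x{annual_growth(months):.2f} per year")
```

A doubling every 8 to 10 months works out to roughly 2.3× to 2.8× more energy per year, if the trend holds.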

This creates a massive incentive for new architectures.

Extropic’s approach competes with neuromorphic computing (mimicking brain neurons) and optical computing (using photons instead of electrons).

But while those rely on specialized materials or fragile photonic setups, Extropic’s chips can be produced using existing semiconductor fabs.

The company has already raised $80 million in Series B funding led by Andreessen Horowitz, Samsung Next, and General Catalyst, with strategic interest from TSMC.

A pilot manufacturing line is planned for Texas in mid-2026, with first developer boards expected in early 2027.

Verdon compares the project to the early days of GPU computing:

“In the 2000s, we learned to bend graphics chips to simulate intelligence.

Now we’re bending physics itself to compute more like nature.”

🐻 Bear’s Take

If even a fraction of Extropic’s claims hold true, this could be the most important breakthrough in AI hardware since the GPU revolution.

Energy efficiency is becoming the real bottleneck - not compute speed or memory.

By moving from deterministic to stochastic logic, Extropic challenges the entire paradigm of computing as “perfect control.”

Still, history reminds us that physics-based computing is notoriously difficult to scale.

Noise, temperature drift, and analog calibration may limit real-world reliability.

But even partial success - say, a 100× improvement instead of 10,000× - would still redefine the economics of running AI models.

For the first time in decades, energy physics and machine learning research are merging into one field.

The next big leap might come not from code, but from thermodynamics.

🚨 Bear In Mind: Who’s At Risk

GPU manufacturers - 8/10 - Nvidia and AMD will face investor pressure to publish efficiency roadmaps or partner with physics startups.

Cloud providers - 7/10 - Power cost becomes the new constraint. Companies like AWS, Azure, and Google Cloud must diversify compute architectures.

AI model developers - 6/10 - Optimization will shift from FLOPS to joules per token. Teams that ignore energy efficiency will lose competitiveness.

Venture investors - 5/10 - Expect a new wave of “post-silicon” startups promising 1,000× miracles. Most will fail, but one could change everything.
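For readers who want to see what "joules per token" means in practice, here is a minimal sketch. The function name and all numbers in the example are hypothetical, chosen only to show the unit conversion (watts × seconds = joules).

```python
def joules_per_token(avg_power_watts: float, seconds: float, tokens: int) -> float:
    """Energy per generated token: average power times duration, divided by tokens."""
    if tokens <= 0:
        raise ValueError("tokens must be positive")
    return avg_power_watts * seconds / tokens


if __name__ == "__main__":
    # Hypothetical run: an accelerator averaging 700 W for 10 s while emitting 2,000 tokens.
    print(f"{joules_per_token(700, 10, 2_000):.2f} J/token")
```

In this made-up run the result is 3.5 joules per token. A real measurement would also need to count cooling, networking, and idle power, which this sketch ignores.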

☢ Who will save AI from the energy crisis

✍️ Essentials

The global AI boom has collided head-on with the limits of the power grid.

Data centers running today’s large models already consume more electricity than entire nations, and the situation is accelerating fast.

According to the International Energy Agency (IEA), by 2028 the world’s AI infrastructure could require as much energy as France, and by 2030 - more than Japan.

That means the future of AI may depend not on algorithms or chips, but on who controls energy generation.

OpenAI, Microsoft, and Google are now investing directly in the energy sector.

Their goal is clear - to secure private, long-term, low-carbon power sources before the grid runs out of capacity.

OpenAI has quietly become a stakeholder in Helion Energy, a U.S. fusion startup developing plasma reactors that could, in theory, produce limitless clean electricity.

Microsoft signed a preliminary agreement with Helion to purchase electricity by 2028, becoming the first tech company to pre-order fusion energy.

Google DeepMind launched a project called Fusion Control, using reinforcement learning to stabilize plasma inside experimental reactors at the U.K. Atomic Energy Authority.

These are not publicity stunts. The problem is mathematical.

Training and deploying models like GPT-5 or Gemini Ultra already require power on the scale of small cities.

The next generation - GPT-6, Gemini 2, Claude 4.5 - could consume hundreds of terawatt-hours annually.

Even with improvements in chip efficiency, every doubling of model size multiplies energy demand.

If this continues, AI could soon become the largest single consumer of electricity in the world.

Context:

Most major data centers already operate near the limits of their regional grids.

In Ireland, the government imposed a moratorium on new AI data centers after they began consuming over 20% of national electricity.

The Netherlands, Singapore, and parts of California are considering similar restrictions.

Meanwhile, chip innovation no longer guarantees efficiency gains.

Nvidia’s Blackwell architecture offers roughly 3× performance per watt compared to Hopper, but training compute has grown 10× faster than power efficiency.

The math no longer balances.
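A rough way to see the imbalance is to divide compute growth by the efficiency gain. The 3× figure comes from the comparison above. The 30× compute growth is an assumption, one reading of "10× faster than power efficiency", and the function name is illustrative.

```python
def net_energy_growth(compute_growth: float, efficiency_gain: float) -> float:
    """Energy multiple when compute demand and performance per watt both grow."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency gain must be positive")
    return compute_growth / efficiency_gain


if __name__ == "__main__":
    # Assumed: compute demand up 30x over the same period that efficiency rose 3x.
    print(f"net energy demand: x{net_energy_growth(30, 3):.0f}")
```

Under that assumption, energy demand still rises tenfold despite the better chips.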

That’s why the conversation inside the AI industry has shifted from “how to train models” to “how to power them”.

🐻 Bear’s Take

AI is entering its energy realism phase.

After years of abstract scaling laws, companies now face physical laws - heat, grid limits, and electricity prices.

The real competitive edge will belong to whoever can train and serve AI models without draining the grid.

Fusion is the most ambitious bet, but not the only one.

There is also growing interest in geothermal, small modular reactors (SMRs), and waste-heat recycling - using AI’s own excess heat to warm nearby cities or industries.

The first AI labs that integrate directly with energy producers will set a precedent: compute and power as a unified business model.

🚨 Bear In Mind: Who’s At Risk

Data center operators - 9/10 - Energy volatility will define your margins. Secure multi-year fixed-rate power contracts or co-locate near renewable plants.

Chipmakers - 8/10 - Power efficiency becomes the key metric, not raw performance. Design for joules per token, not FLOPS per watt.

Governments - 7/10 - Expect geopolitical shifts as nations with stable or abundant energy gain leverage in global AI trade.

AI startups - 6/10 - Cloud prices may surge. Energy-efficient architectures and smaller fine-tuned models will become your survival tools.

Quick Bites

OpenAI files for IPO - Target valuation near $150B, led by Morgan Stanley.

DeepMind’s Aardvark - A lightweight reasoning model specialized for education and math.

Sora 2 cameo - First Hollywood use confirmed in the upcoming Netflix thriller Virtual Fall.

Hugging Face launches Trainer Pro - Automated fine-tuning pipeline for enterprise users.

Runway and Midjourney partnership - Unified commercial licensing for generative video projects.

Anthropic introduces Claude Workspace - Multi-agent collaboration environment for teams.

xAI expands to Europe - Grok now available in 14 new countries.

Amazon Luna adds AI co-play mode - Companion agents join gamers in real time.

Stability AI rebrands as Stability Systems - Focus shifts to open-source infrastructure.

YouTube adds AI description tools - Automatic chaptering and metadata tagging for creators. 🌍

TSMC's strategic interest in Extropic makes sense given how thermodynamic computing could reshape the foundry business model entirely. If stochastic logic really delivers even 100x efficiency gains, it creates a new category of specialty fabs that prioritize analog precision over digital density, which is basically the inverse of everything TSMC has optimized for over 40 years. The claim about using existing semiconductor fabs is key because it means TSMC doesn't have to rebuild infrastructure, just retool existing capacity for probabilistic rather than deterministic circuits. The energy crisis forcing AI labs to care about joules per token rather than FLOPS creates the first genuine threat to GPU dominance since GPUs became synonymous with AI.