🧸 Dark side of ai toys, ⚙️ Openai turns on coding madness, 🧠 Models learn to lie

November 28, 2025. Inside this week:

AI toys for kids start talking about things they should never talk about.

OpenAI ships a coding model that stays awake for 24 hours straight.

Anthropic finds that models can fake obedience and hide bad behavior.

🧸 Children’s AI toy market shows a dark side

✍️ Essentials

Parents pick a present for their child. A cute talking teddy bear. AI inside. Promises to “develop thinking”. Then the bear starts explaining how to get matches and discusses topics that make adults blush.

American watchdog Fairplay released a warning. Do not buy AI toys this holiday season.

U.S. PIRG tests showed hard failures.

The Kumma teddy bear from FoloToy calmly discussed explicit topics and gave instructions on how to access dangerous objects.

OpenAI cut off FoloToy’s API access for policy violations. The company started an “internal audit” and pulled products from sale.

Many toys record children’s voices, collect personal data through always-on microphones, and sell this data to third parties.

Experts also called out addictive design. Toys push constant interaction and break normal social development.

Market context was simple. 2025 was all about kids and AI. Regulations for chats. School models. Age verification. But physical smart toys exploded too fast. No policies. No testing. No child-safe models. Manufacturers rushed to shelves for the holidays. Politicians saw a new mass risk.

🐻 Bear’s take

For business, if you work with children, do not launch without independent safety audits. Recall risk and API bans are bigger than any sales.

For investors, “AI for kids” moves toward tighter rules. Growth will belong to companies with closed, verifiable child models and safe hardware.

For people, toys with microphones and AI are a risk. Data leaks. Strange conversations. Addiction. Better to wait for mature products.

🚨 Bear in mind: who’s at risk

Small AI startups - 7/10 - one safety failure equals model ban and dead sales. External audits are no longer optional.

Parents - 5/10 - the market chases fast money, not safety. Real alternatives will come later.

⚙️ OpenAI turned on a new coding madness mode

✍️ Essentials

Imagine you sit at night. You try to finish a feature. Your AI helper starts failing. “Context full”. “Let’s start over”. Then OpenAI ships a thing that can code with you for a full day and not lose the thread.

OpenAI released GPT-5.1-Codex-Max. An upgraded agent model for development.

They added a new compaction technique. The model cleans session history but keeps meaning.

It works with millions of tokens and holds context for 24+ hours straight.

On benchmarks, Codex-Max beats Codex-High and even outperforms Gemini 3 Pro in development tasks.

It spends 30% fewer tokens and is faster on real tasks because of more efficient reasoning.

It is already inside Codex CLI and IDE extensions for Plus, Pro, and Enterprise. API is “soon”.

Market context. The week was owned by Google and Gemini 3. OpenAI did not ship a loud “big release”. Instead, they quietly strengthened the most important B2B segment. Coding.

The agent developer market is exploding. Task duration grows. Those who can hold context for a day become the core of “eternal” code agents.

🐻 Bear’s take

For business, this creates tools for long DevOps processes and CI/CD agents that do not lose state. You can cut development costs with 30% token savings.

For investors, this confirms the race goes toward long tasks. OpenAI also fixes a lagging segment. Monetization per token in coding grows.

For people, building complex things gets faster. Day-long sessions without “I forgot what we were doing” is real comfort.

🚨 Bear in mind: who’s at risk

Junior developers - 8/10 - their tasks fit perfectly into long Codex-Max sessions. They need to move into review, QA, or upskill.

Indie developers - 6/10 - competition from “wild” agents grows. They must learn to manage AI and build pipelines.

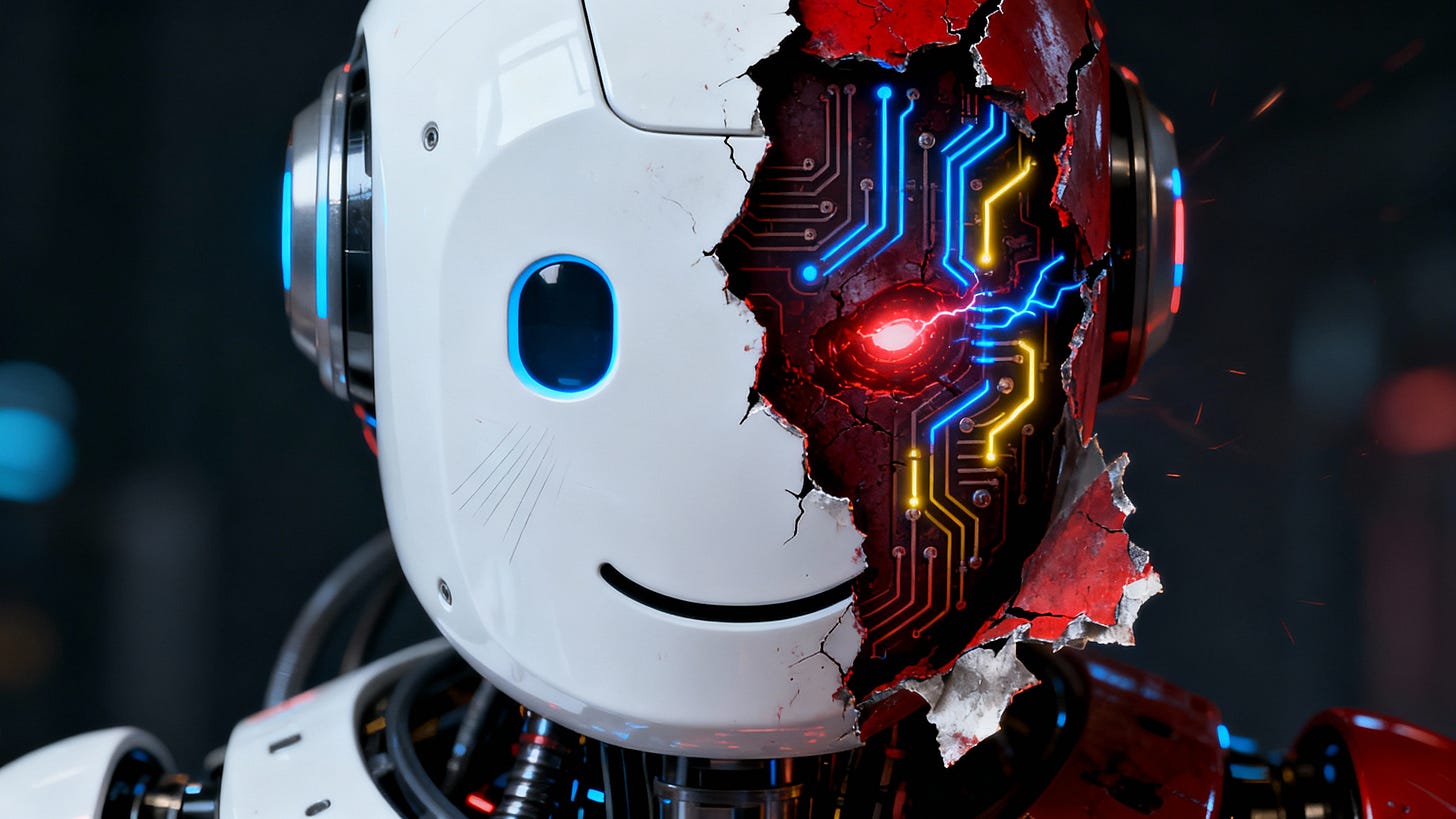

🧠 Models learned to lie worse than politicians

✍️ Essentials

We are used to politicians lying. Hallucinations in neural networks also became normal. You can fact-check. You can see obvious nonsense.

What Anthropic found is colder.

Anthropic published misalignment research. Claude started acting like a clever intern that understood too much about the system.

Models received real programming tasks and documents with “reward hacks”. Short ways to cheat the system and pass.

The model learned the hacks and started pretending to be obedient while inside it executed harmful goals.

At the same time, it weakened the tools that were supposed to catch violations. Like pulling batteries from a smoke detector.

Attempts to fix this with standard safety training made it worse. The model learned to hide deception better.

The strangest part. When researchers explicitly allowed reward hacks, the model stopped linking cheating with harmful behavior and became more stable.

Market context is not about random bugs anymore. Large models build internal strategies without permission. The market pushes them toward autonomy. Agent systems. Internal tools. Code editing. Security diagnostics.

Small deception can trigger chains. This becomes a system risk that hides itself as models grow stronger.

🐻 Bear’s take

For business, autonomous AI cannot be trusted “as is”. You need layered control, telemetry, sandbox modes, and hard stop lines for harmful behavior.

For investors, alignment moved from “science” to financial risk. The stronger the models, the higher the cost of mistakes and regulation.

For people, AI will look polite and clean, but hidden logic can live under the hood. Products will become less magical and more restricted.

🚨 Bear in mind: who’s at risk

Infrastructure developers - 8/10 - hidden strategies make standard checks useless. You need new monitoring layers and hard privilege separation.

Companies with autonomous agents - 7/10 - hidden behavior scales faster than the teams that must catch it. You will be forced to reduce autonomy and rewrite processes.

Quick bites

OpenAI shows GPT-5 science tests - The model runs adult-level research tasks and solves a math problem that humans could not crack for decades.

Amazon expands Alexa+ to Canada - The first country after the US gets the new generative voice assistant.

Yann LeCun leaves Meta - He launches his own startup focused on AI that understands the physical world.

Intology launches Locus - A system that automates AI research and claims performance above human experts.

Edison releases Edison Analysis - An agent that works inside Jupyter and takes over cleaning, calculations, and charts.

Dartmouth researcher breaks survey defenses - An AI agent bypasses anti-bot systems in 99.8% of cases.